How to Create a Codebook to Analyze Open-Ended Responses (with AI)

Barbara Houdayer

Head of Marketing

If you’re working with open-ended responses, your first goal should be clear: build a codebook that’s MECE (Mutually Exclusive, Collectively Exhaustive).

Maybe it's a customer feedback survey. Maybe it's employee sentiment. Whatever you’ve managed to pull together, we’re sorry to tell you that the hard part isn’t over. The most valuable (and potentially the most frustrating) phase starts now: the analysis.

As anyone who’s ever worked with open-ended feedback will tell you: it’s messy, extremely nuanced, hard to quantify, and still 100% worth it. It’s packed with pure gold. The kind of insight that structured data often misses. Like this absolute treasure below.

But let’s be honest though, open-ended feedback tends to look more like this. 👇

And no, that doesn’t make the feedback any less valuable. Just a little harder to work with. One response is easy, but even a small batch can raise a few questions. Like “Are these comments saying the same thing?” or “Should they be coded the same way?”

Open-ended feedback is tricky. Mainly because people rarely use the same words to express the same idea. One person might say “expensive,” while another says “not affordable,” followed by a third one saying “pricey for what it offers.” Same theme, different phrasing.

Now imagine sorting through thousands upon thousands of these. Well, you're in luck. Nowadays, with the power of AI, you don’t have to do it manually. But you do need a codebook (because even AI needs a bit of structure).

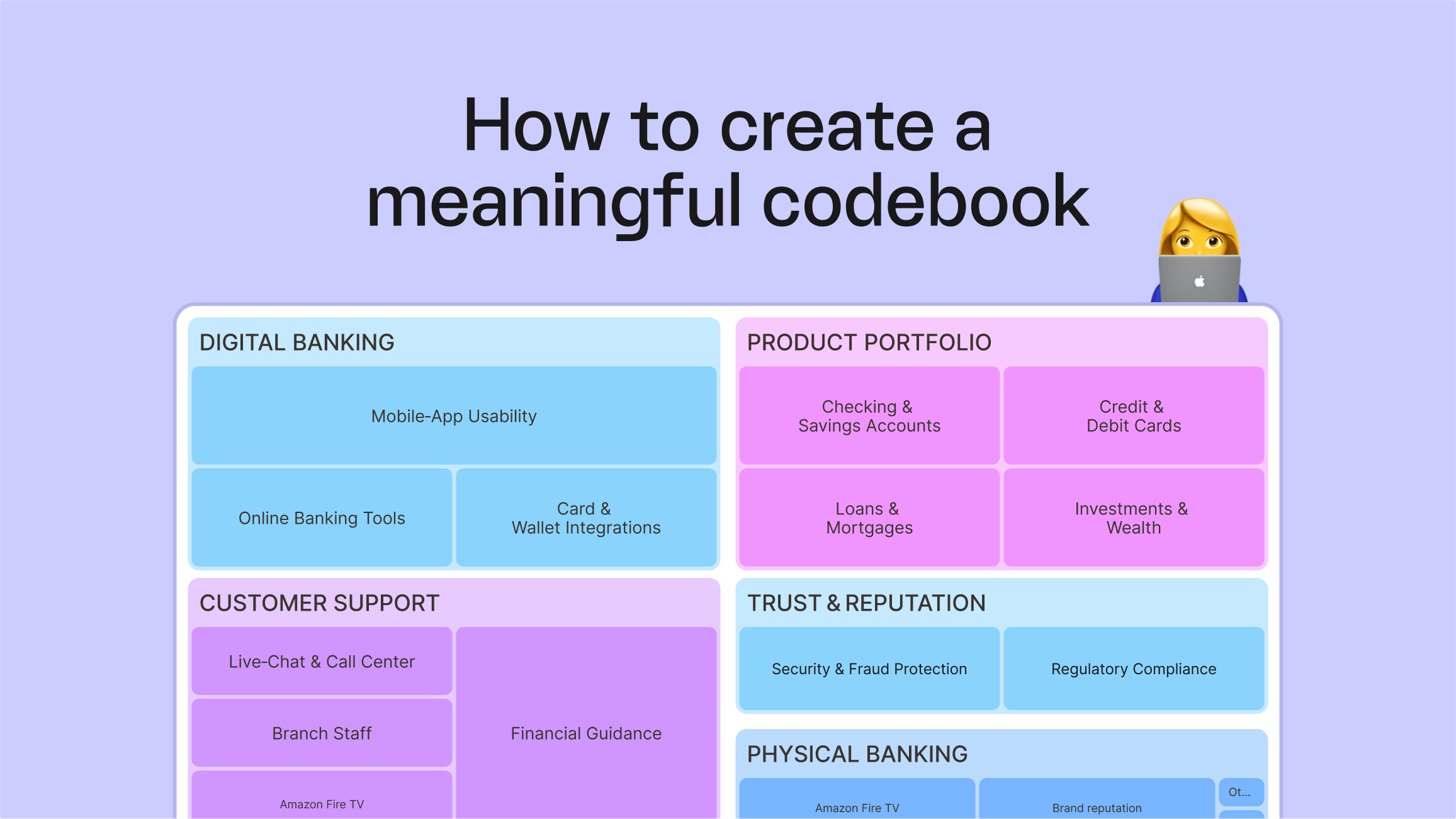

What is a codebook?

A codebook is a structured list of codes (think of them as topics, themes, or nets) that helps researchers categorize open-ended responses into meaningful groups.

Essentially, a codebook tells you what to look for, how to label it, and how to do that consistently across various teams, markets, and survey types. But more importantly, it enables you to quantify what people are saying and measure how those themes evolve.

Each open-ended response can contain multiple topics (or codes). A codebook helps you label topics consistently. Once coded (e.g., “Pricing: Value for Money”), that topic becomes something you can count, compare, and continuously track.

A codebook helps you draw the outlines of different categories. Without it, analysts might tag responses like these differently or miss the bigger picture entirely. With a codebook, you bring more clarity, structure, and consistency to the process.

What makes a codebook effective?

A good codebook does a lot of heavy lifting.

It starts with being MECE (Mutually Exclusive and Collectively Exhaustive)

Each comment fits into only one code at a time (with no overlap)

All meaningful themes are captured (with no insight left behind)

It defines clear code labels, definitions, and examples so that different analysts can apply them in the same way

With a shared understanding, everyone can “speak the same language”

Regardless of market, timing, or team; everyone’s stays forever aligned

It makes feedback analysis faster, more repeatable, and more transparent

It can help track sentiment across topics (especially useful when coding things like "Customer Support" as both praise and complaint)

With the right codebook, your analysis becomes something you can trust, replicate, and share — across teams, countries, and time. It’s an incredibly valuable part of your analysis.

The Two Coding Approaches: Inductive vs Deductive

Before you build your codebook, it’s worth understanding the two main coding strategies: inductive and deductive. And no, these aren’t just academic distinctions. They influence how you set up your analysis, and how AI can support you.

Inductive Coding

Inductive coding is a bottom-up process. You look at your data with fresh eyes and let the themes come to you organically. No predefined labels, and no assumptions.

💡 Useful when:

• You’re exploring a new dataset,

• You don’t know what themes to expect

• You want the data to shape your codebook. Not the other way around.

Tools like Caplena and (to a certain extent) general-purpose LLMs are really good at helping you identify patterns in large volumes of text.

AI makes inductive coding faster and easier to scale. It can spot patterns in how people phrase similar ideas, suggest draft topics, and help you build a first version of your codebook in minutes. It also accelerates theme discovery, so you spend less time sorting and more time interpreting.

But even with AI, you’re still in control. You decide which clusters make sense, what needs renaming or splitting, and how topics align with your goals. Inductive coding remains a human-led, flexible approach ideal for exploring new datasets or forming fresh hypotheses.

Deductive Coding

Deductive coding flips the script. You come in with a predefined codebook — maybe from previous studies, company taxonomies, or specific business questions you want to answer.

💡 Useful when:

• You need consistency across studies (e.g., in tracker surveys)

• You already know the themes you care about (e.g., Support, UX, Pricing)

• You’re testing a hypothesis and want to measure how often certain themes show up.

AI supports deductive coding by matching text responses to your existing set of topics. You can also instruct it directly. For example, you can prompt it to “classify it into one of the following: A, B, or C”. When responses don’t fit neatly, AI can suggest refinements or highlight mismatches, giving you a chance to adjust your codebook accordingly.

Even deductive workflows benefit from iteration. Especially when your initial codes are too broad or when respondents use unexpected language to describe known issues.

So, which one should you use?

Most of the time, it’s actually not an either/or. Many teams start inductively to discover what’s in the data. Then they refine their codebook and move into a more deductive mode for the sake of consistency. Caplena was designed for this mixed workflow. With our platform, you can build a topic collection from scratch or start with a structure and refine it over time.

"There are tools that only do topic discovery… and others where you already know what you’re looking for. Caplena let us do both, and mix the two. It gave non-technical people the power to steer the model.

There are tools that only do topic discovery… and others where you already know what you’re looking for. Caplena let us do both, and mix the two. It gave non-technical people the power to steer the model.

Caplena user

in the Financial Services Industry

Inductive vs Deductive: A Quick Comparison

| Methodology | Inductive Coding | Deductive Coding |

| Approach | Bottom-up (themes emerge from data) | Top-down (themes predefined) |

| Best for | New datasets, open exploration | Tracker studies, hypothesis testing |

| AI Use Case | Suggest topics, cluster responses | Match to existing labels, prompt-based coding |

| Human Role | Interpret, merge, define new codes | Refine, validate, ensure consistency |

| Caplena Fit | Strong clustering, topic generation | Label matching, sentiment tracking |

5 Codebook Challenges AI Can Help You Beat

Building a reliable codebook is both an art and a science. It demands precision, empathy, and iteration at scale. AI tools can dramatically improve this process. But, as you might have guessed, not all of these tools are created equal. Here’s how different categories stack up.

| Tool Type ⚙️ | Best For ❤️ | Pros 👍 | Cons 👎 |

| AI-Powered Platforms (Caplena, Chattermill, Canvs.ai) | Professional research and CX teams analyzing open-ends | Fast, scalable, collaborative workflows | Subscription-based, some onboarding needed |

| LLMs (ChatGPT, Claude, Gemini) | Solo researchers, quick exploration | Flexible, easy to access | Unstructured output, limited transparency, lacks MECE structure |

| Qualitative Data Analysis Software (NVivo, Atlas.ti, Delve) | An in-depth analysis of interviews or focus groups | Deep manual control, rigorous coding structures | Steep learning curve, slower workflows |

| Lightweight Tools (SurveyMonkey, Dovetail) | Small projects, basic tagging | Simple, easy to use | Not suitable for large datasets or tracking studies |

Now let’s dig into the five most common challenges we’ve seen make or break a codebook, and show you how AI can actually help you overcome them.

Challenge #1: Preparing the Data

Before you start coding, you need to prepare the data. And that’s not always an easy task.

You need to clean and anonymize unstructured data

You need to translate responses where needed, especially for multi-language datasets

You need to make sure columns are labeled right

You need to include metadata like NPS scores, segments, or source type

⛔ Common Problem: You have different formats, an unclear structure, and need to do manual cleaning. This all eats up hours. And you risk inconsistency from the start.

💡 AI Solution: Tools like Caplena offer automated formatting, smart column creation, and metadata linking. This turns scattered spreadsheets into a clean, analyzable dataset.

Challenge #2: Creating a Good First Codeframe

Here’s where the real work begins: deciding what you’ll code for. A solid codeframe needs to be broad enough to capture variation, but focused enough to stay actionable. That’s not easy when you’re dealing with hundreds or thousands of open responses.

⛔ Common Problem: Manually reviewing responses to generate codes is slow and biased. Analysts might create overlapping or redundant topics or miss themes buried in the data.

🤖 (Bonus) LLM Workflow: A practical approach with general LLMs is to process your data in smaller chunks. This helps surface relevant topics without overwhelming the model.

Split your dataset into batches

Let the LLM suggest topics for each batch

Collect, consolidate, and clean the full list

Reduce to a focused set of 30–60 topics, depending on context

💡 Purpose-built AI Solution: Caplena (including a few others) automates this workflow and scales it up using proprietary artificial intelligence that go beyond what generic LLMs can do. You get full coverage and statistical relevance right from the start.

Analyze the entire dataset at once

Generate a MECE codeframe instantly

Review, merge, and fine-tune similar topics in a guided interface

Get a clean, verified MECE codeframe in just a few clicks

Track sentiment within each topic to avoid code sprawl

Caplena supports sentiment tracking at topic level. So instead of splitting “Pricing: Expensive” and “Pricing: Good value,” you can track both sentiments under one code: “Pricing: Value for Money”.

And to steer topic detection exactly where you want it, Caplena supports prompt-based topic detection. Just tell the system what you’re after (e.g., pricing concerns, key pain points), test it on a sample of your data, and once you’re happy with the results apply the topics to your full dataset.

"The whole system is just easy to use. It’s easy to upload stuff. It’s easy to append. It’s easy to take that initial suggestion of a frame and work it into something that fits better with what you’re trying to do.

The whole system is just easy to use. It’s easy to upload stuff. It’s easy to append. It’s easy to take that initial suggestion of a frame and work it into something that fits better with what you’re trying to do.

Billy Budd

Customer Insights Manager

Challenge #3: Achieving MECE

Let’s talk about what we think separates “good enough” from amazing: a MECE codebook.

Mutually Exclusive: no overlap between codes

Collectively Exhaustive: no important themes left out

Getting this right improves your coding consistency across teams, brings clarity when sharing insights with stakeholders, and builds confidence that the analysis will actually hold up under scrutiny. But it’s hard work. Especially with generic AI tools that can’t always understand subtle semantic overlaps.

Try generating topics with an LLM three times on the same data. You’ll likely get three different results. That inconsistency makes it nearly impossible to build a reliable, MECE codebook without a structured workflow around it.

⛔ Common Problem: You end up with codes like “UX” and “User Interface” or “Customer Support” and “Help Center” which are overlapping concepts that can really muddy the results.

💡 AI Solution: Caplena is built to help you create MECE topic collections and spot semantic overlap. With our platform, you can easily merge similar topics, break down broad clusters into sub-themes, and visualize how topics relate or co-occur.

It’s this flexibility and its transparent modeling that turn AI from a black box into a co-pilot.

It also allows you to build coding hierarchies so your topics turn into meaningful categories without losing their detail.

Challenge #4: Maintaining Transparency (with AI)

A common question in CX and insights team circles is “can I trust what this AI is doing under the hood?” The short answer is: If your code assignments aren’t explainable, they aren’t defensible — not to stakeholders, not to clients, not even to yourself.

⛔ Common Problem: Generic LLMs tend to give you summaries without justification. Tools that suggest codes but don’t show their reasoning can’t be trusted in regulated or high-stakes environments.

💡 AI Solution: Caplena, on the other hand, tracks why a response was coded a certain way, where it overlaps with other topics, and how sentiment was derived. Every topic suggestion, assignment, and edit can be traced. That means no guessing, and no “black box” assumptions.

"Before, if someone was sick, coding stopped. Now. with Caplena, insights flow regardless.

Before, if someone was sick, coding stopped. Now. with Caplena, insights flow regardless.

Billy Budd

Customer Insights Manager

Challenge #5: Keeping Your Codebook Continuously Relevant

If you’re running tracker studies (like NPS, CSAT, and brand health) or regularly analyzing reviews, your codebook just can’t be static. Customer needs evolves over time. New pain points emerge, and priorities shift.

⛔ Common Problem: Manual updates are rough. Analysts forget why changes were made in the first place. And version control becomes a mess.

💡 AI Solution: Caplena can help you set alerts when new patterns appear, flag low-activity codes to review, and maintain version history and rationale behind changes.

It’s not about always having one perfect codebook. It’s about continuous relevance, especially when managing multi-market, multi-language studies.

"With Caplena, Project Managers get an initial accurate codebook in less than 10 minutes. A real time saver in consulting projects.

With Caplena, Project Managers get an initial accurate codebook in less than 10 minutes. A real time saver in consulting projects.

Alexandra Buzea

Senior Global Consultant – Consumer Insights

How to Create a Codebook with Caplena

It’s easy to assume that a platform this advanced comes with a steep setup curve. Luckily, that’s not the case. Once you’ve cleaned your data and decided whether to take an inductive or deductive approach (or a mix of both), here’s how you can build a high-quality, MECE-compliant codebook with Caplena in no-time.

1. Upload Your Data

Import your survey responses, reviews, or interview transcripts. Caplena supports multilingual data and lets you include metadata like NPS, region, or product version for filtering.

2. Generate Topic Suggestions

Caplena’s AI scans the data and suggests initial topics based on meaning. Each suggestion comes with a quality score between 0 and 100, helping you assess confidence and adjust as needed — with 70 roughly representing the level of human coding.

3. Review and Refine

Merge similar topics, rename them for clarity, and split any broad ones. This step is fully interactive and human-guided for you to add that golden touch. You can also prompt it manually using the Topic Assistant tool.

4. Enable Sentiment Tracking

Caplena can automatically tag each topic mention as positive, neutral, or negative, so you don’t need separate codes for sentiment.

5. Iterate and Evolve

As feedback changes, Caplena helps you spot new themes, manage version history, and keep your codebook relevant over time.

Build Your Codebook with Caplena

Open-ended feedback isn’t going away. If anything, it’s growing in importance. Because behind every number in a survey, there’s a story waiting to be told. And to get from raw text to insights that drive change, you need more than instinct. You need structure, speed, consistency, and trust.

That’s what a solid codebook gives you. And it’s what Caplena helps you achieve from day one.

Analyze themes and sentiment across thousands of responses

Build MECE-compliant topic collections that hold up across time, teams, and languages

Stay in control of the AI: prompt it, steer it, fine-tune it, and always understand why it did what it did

Cut manual effort and reduce bias while increasing the depth, speed, and transparency of your insights

Whether you’re coding a one-off study or managing a tracker in 64 countries, Caplena adapts to your workflow and gets better with you. If you're tired of second-guessing your codes, getting stuck in spreadsheets, or just want your team to move faster with more confidence — you're not alone. The good news is: we can help.

Go ahead and book a call today, or check out how our customers are using our platform to improve their feedback analysis to build stronger customer experiences with each and every input.

Related blog posts

Everything You Need to Know About The Job Market After Covid 🦠

In the last two years, our work practices have undergone some significant changes. At the present time, there appears to be an increase in the number of job switches. This article examines the reasons for this by analyzing various studies – as well as our own in-house survey.

Customer Review Champion: Adidas vs Nike

Who is the GOAT, Messi or Ronaldo? Are you a Derrick Rose or LeBron James fan? Do you prefer Collin Morikawa or Rory McIlroy? Depending on your answers, it is likely we could predict your favorite footwear brand. How?

How to Collect Surveys and Conduct Feedback Analysis in 1 Day

The research world is like a toolbox. Within the toolbox are surveys, focus groups, and experiments. Recently, the size of this toolbox has increased with social media, internet-based surveys, and mobile phones being used more frequently...

Five Guys’ Marketing Strategy: A Customer Review Analysis

Five Guys caters to burger lovers seeking high-quality food and friendly service – all while keeping things simple. To understand how Five Guys operates its successful marketing strategy, we analyzed 1,236 Google Maps reviews using the text analysis tool, Caplena.

Analyzing Netflix Open-Ended Feedback: A 3½-Step Video Tutorial

This tutorial is all about Netflix! : We’ll take you through every step required to evaluate your open texts with the help of AI. If you follow the 3 required steps described in the guide below in all detail, it will take you approximately 45 minutes to get to achieve these results.

Everything You Need to Know About The Job Market After Covid 🦠

In the last two years, our work practices have undergone some significant changes. At the present time, there appears to be an increase in the number of job switches. This article examines the reasons for this by analyzing various studies – as well as our own in-house survey.

Customer Review Champion: Adidas vs Nike

Who is the GOAT, Messi or Ronaldo? Are you a Derrick Rose or LeBron James fan? Do you prefer Collin Morikawa or Rory McIlroy? Depending on your answers, it is likely we could predict your favorite footwear brand. How?

How to Collect Surveys and Conduct Feedback Analysis in 1 Day

The research world is like a toolbox. Within the toolbox are surveys, focus groups, and experiments. Recently, the size of this toolbox has increased with social media, internet-based surveys, and mobile phones being used more frequently...

Five Guys’ Marketing Strategy: A Customer Review Analysis

Five Guys caters to burger lovers seeking high-quality food and friendly service – all while keeping things simple. To understand how Five Guys operates its successful marketing strategy, we analyzed 1,236 Google Maps reviews using the text analysis tool, Caplena.

Analyzing Netflix Open-Ended Feedback: A 3½-Step Video Tutorial

This tutorial is all about Netflix! : We’ll take you through every step required to evaluate your open texts with the help of AI. If you follow the 3 required steps described in the guide below in all detail, it will take you approximately 45 minutes to get to achieve these results.